Technology may run on software, but software needs fuel. That fuel is data. And unless properly configured for a specific application, technology won't run very well. Like putting the wrong gas in your car makes it run badly (or not at all), bad input means bad output. Input in our scenario = data, and that makes clean data migration critical to the success of your ETL (extract, transfer, load) project.

The reality is that data migration is the last step in the process. We've sat through hundreds of data-mapping sessions and validated thousands of datasets. From that experience, we've learned a few things about data migration and its partner, data validation. In this post, we define the four big truths about data migration, but if you’re familiar with them, skip to the Readiness Quiz and find out if your team is truly ready.

Truth #1: Begin at the Beginning: Source Data

Data migration begins by understanding the source data. What does it represent? How is it generated? How is it used today? And what controls it? A new technologically advanced system may not fall into the same database storage or structure. So how much of the source data is really of use and that needs to be migrated?

If your business is migrating from legacy technology, it’s not uncommon for project managers to find out that only one or two individuals in the organization understand it’s data. This can cause massive roadblocks.

New technology needs its own version of data, called target data. What happens when a new application with new programs and new configuration rules starts running? You’ll quickly find out, and it could be unexpected. This is where data profiling is extremely beneficial.

Data profiling is a process used to define source data. It’s comprised of three definitional components:

- Accuracy

- Completeness

- Validity

We believe data profiling is essential to ensure a high quality ETL process. Data profiling can create the opportunity to cleanse your files of incorrect or unused data, modify conversion code, etc. This can be particularly useful if you’re migrating from a legacy system that has been in place for many years, as there could be thousands of errors in the data that will bog down the schedule with testing errors that can be eliminated ahead of time.

Manually performing this kind of data profiling is possible, but bringing in a data migration expert will accelerate your ability to pinpoint data issues through the use of sophisticated query tools. In addition, expert data profiling ensures that you’ve captured the most important data issues, especially those that might put your organization at risk post-conversion.

Correct profiling will filter out unnecessary data, extra validations and legacy data linkages, and dependencies.

Truth #2: How Good is Your Data?

Any data used should be considered high quality. "Data cleansing" is the process to improve data quality. Data cleansing represents both small changes, like ensuring dates are in the correct format, and complex changes, such as de-duping records. Data cleansing is often seen as an opportunity by organizations to clean up files before a technology conversion. However, defining, prioritizing, and executing against it can be a real challenge.

Below are some questions to guide your data migration team’s analysis:

- How much of your existing data is accurate & inaccurate?

- What are the data update intervals and which process(es) update the data?

- Is your data compliant with current regulations?

- Are data attributes consistent across all datasets?

- Should you convert all data, regardless if the original use case still exists?

- Are data formats consistent across all datasets ? (for e.g. Date Format, Currency Decimal places etc.)

Truth #3: The False Promise of Lift and Shift

Lift and shift, the process of simply lifting data from a current source and shifting to a new target, is a myth, and any guarantees that data migration from source to target will work using the lift and shift method are false. The truth is that successful data validation requires a thorough output mapping between the source and target systems.

Ensuring your team has a deep understanding of its new technology, either through vendor training or working with a third-party expert can be a critical factor to a successful conversion. Once completed, a comparison between the source and target system outputs is defined, variances noted, and rank ordered.

Now, armed with real information, your organization can better define its data validation scope. In our experience, time spent testing data without prioritizing data migration risk, is one of the major causes of implementation delays. It not only can cause delays, but also additional coding needs, additional regression testing cycles, and it increases the likelihood the product launch schedule will be interrupted.

Truth #4: Testing is Subjective

The truth is completely testing a new application before launch is unlikely. Going live and finding some degree of errors is very possible. We at Go-Live Faster understand the dynamics of financial institutions and the desire for an error-free implementation. These desires often result in over-testing where every individual field, attribute, and condition is a test case. This kind of approach is not only inefficient, but also time consuming and does little to improve implementation quality.

So what are the right things to test? This is where all the previous activities bear fruit. With a proper understanding of your source system data, assurance of data quality, and effective output-mapping, you’ll be ready to make these decisions with confidence. However, at this point in the process, if you still lack confidence, consider the following:

- If the target system is mature, consider presuming that all core functionality works as defined and focus on any customization or areas of high processing complexity.

- If the target system is immature, consider running the source and target systems in parallel for some period in order to focus testing on variances between the two.

- At a minimum, clean the source system data of inaccuracies, unused fields, and inactive records.

- Consider bringing in an outside domain expert to work in partnership with your team.

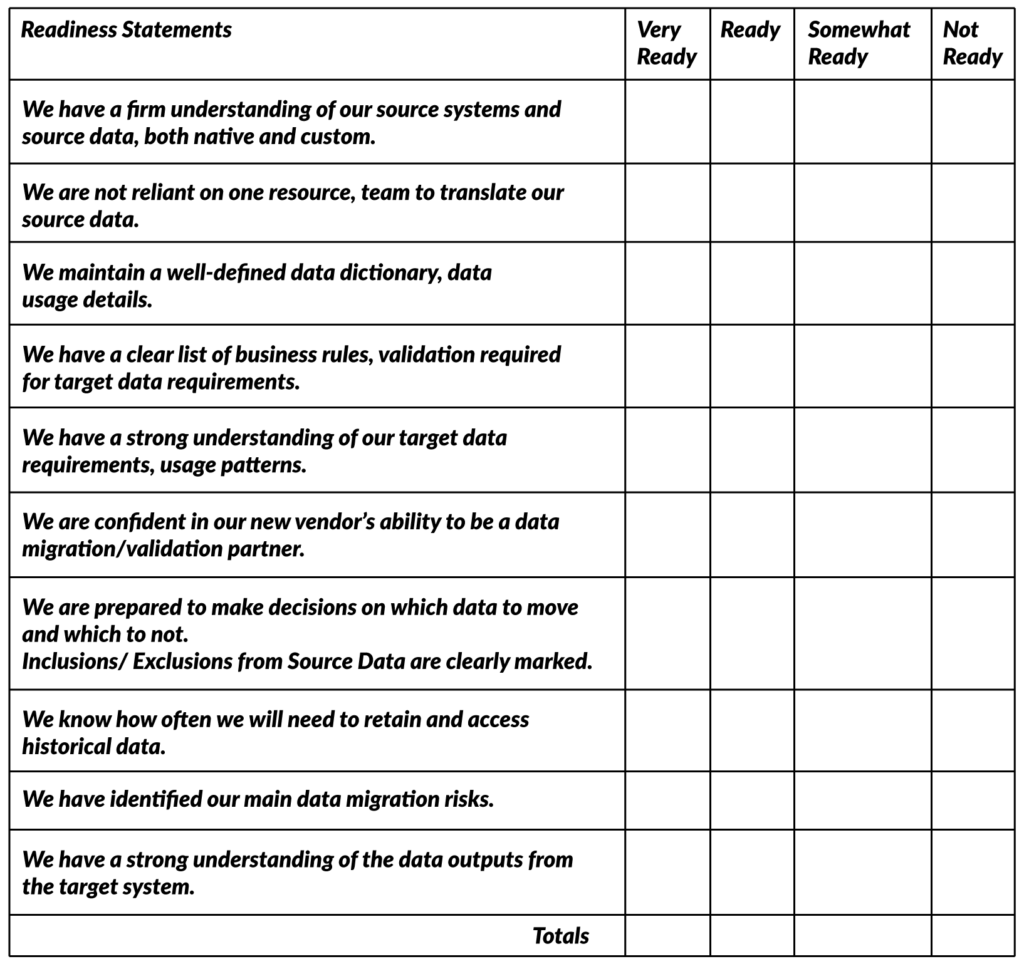

Are You Ready? Take the Quiz and Find Out

Below is our Readiness Quiz. It can help you determine how closely your perception of readiness is to actuality. Simply rate your readiness on a scale of Very Ready to Not Ready for each of the statements listed. Once completed, it will help you see more clearly the strengths and weaknesses of your organization relative to its Extract, Transfer, Load (ETL) plan.

Feel free to cut this quiz out and share it with your planning/steering committees. It’s curated based on our decades-long experience of guiding organizations to better understand their readiness for the large workload ahead of them.

In Summary

The four truths about data migration/validation are:

Organizations undertake data migrations for any number of reasons. These include everything from an entire system upgrade to establishing a new data warehouse to merging new data from an acquisition.

In today’s competitive environment, data is not only the fuel that drives your processes, but it’s the key to unlocking the value in new technologies. The question to ask yourselves is this: are you ready?

In our experience, we’ve found that organizations often under-estimate the effort to effectively migrate data and minimize data validation risks. This occurs when rigorous data quality protocols were either not put in place or have degraded over time. Another challenge is ensuring your team has access to knowledgeable domain and application experts that can effectively put data into its proper context for analysis.

Our Readiness Quiz should give you a better picture of how well your organization understands its source data and how prepared you are for the ETL. If you’d like to discuss its results with our team of experts, we’re here to help make sense of it all.